Workflows

Overview

A Workflow is a non-sequential directed acyclic graph (DAG) of tasks. Use it for complex concurrent scenarios in which a task takes input from multiple parent tasks.

You can access the final output of the Workflow by using the output attribute.
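To make the DAG idea concrete, here is a minimal sketch in plain Python. It is not Griptape's implementation: `PARENTS`, `run_task`, and `run_dag` are hypothetical names, and the standard library's `graphlib.TopologicalSorter` stands in for whatever scheduling the library actually performs. It only illustrates that a task starts once all of its parents have finished and that independent tasks can run concurrently.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter

# Hypothetical stand-in for a workflow: task ID -> IDs of its parent tasks.
PARENTS = {
    "world": [],
    "scotty": ["world"],
    "annie": ["world"],
    "story": ["world", "scotty", "annie"],
}


def run_task(task_id: str, parent_outputs: dict[str, str]) -> str:
    """Pretend to run a task; a real task would call an LLM here."""
    inputs = ", ".join(sorted(parent_outputs)) or "nothing"
    return f"{task_id} (built from {inputs})"


def run_dag(parents: dict[str, list[str]]) -> dict[str, str]:
    """Run each task after all of its parents, batch by batch."""
    sorter = TopologicalSorter(parents)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    outputs: dict[str, str] = {}
    with ThreadPoolExecutor() as pool:
        while sorter.is_active():
            # Tasks whose parents have all been marked done.
            ready = sorter.get_ready()
            futures = {
                task_id: pool.submit(
                    run_task, task_id, {p: outputs[p] for p in parents[task_id]}
                )
                for task_id in ready
            }
            # Simplification: wait for the whole batch before scheduling more.
            for task_id, future in futures.items():
                outputs[task_id] = future.result()
                sorter.done(task_id)
    return outputs


outputs = run_dag(PARENTS)
print(outputs["story"])
```

In this sketch `scotty` and `annie` become ready together once `world` finishes, while `story` waits for all three of its parents.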

Context

Workflows have access to the following context variables in addition to the base context:

- task_outputs: dictionary containing mapping of all task IDs to their outputs.
- parent_outputs: dictionary containing mapping of parent task IDs to their outputs.
- parents_output_text: string containing the concatenated outputs of all parent tasks.
- parents: dictionary containing mapping of parent task IDs to their task objects.
- children: dictionary containing mapping of child task IDs to their task objects.
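The following sketch shows roughly what the output-related variables could hold for a given task. It is a plain-Python illustration, not Griptape code: `build_context` is a hypothetical helper, the outputs are placeholder strings, and joining with a newline for `parents_output_text` is an assumption. The `parents` and `children` variables are left out because they hold task objects rather than text.

```python
def build_context(
    task_id: str,
    parents_of: dict[str, list[str]],
    outputs: dict[str, str],
) -> dict[str, object]:
    """Assemble the output-related context variables for one task (illustrative only)."""
    # Outputs of every task that has finished so far, keyed by task ID.
    task_outputs = dict(outputs)
    # Only the outputs of this task's direct parents.
    parent_outputs = {p: outputs[p] for p in parents_of[task_id] if p in outputs}
    # Assumption: parent outputs are concatenated with a newline separator.
    parents_output_text = "\n".join(parent_outputs.values())
    return {
        "task_outputs": task_outputs,
        "parent_outputs": parent_outputs,
        "parents_output_text": parents_output_text,
    }


# Placeholder data shaped like the example workflow on this page.
parents_of = {
    "world": [],
    "scotty": ["world"],
    "annie": ["world"],
    "story": ["world", "scotty", "annie"],
}
outputs = {"world": "W", "scotty": "S", "annie": "A"}

scotty_context = build_context("scotty", parents_of, outputs)
story_context = build_context("story", parents_of, outputs)
print(scotty_context["parent_outputs"])
print(story_context["parents_output_text"])
```

Here `scotty` sees only the `world` output in `parent_outputs`, while `task_outputs` contains every finished task regardless of parentage.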

Workflow

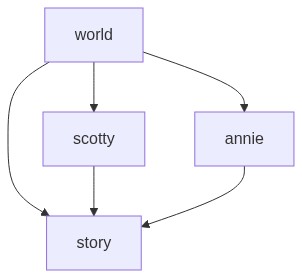

Let's build a simple workflow. Say we want to write a story set in a fantasy world with some unique characters. We can set up a workflow whose first task generates a world from a few keywords. The world description is then passed to any number of child tasks that each create a character. Finally, the last task pulls in the outputs of all its parent tasks and writes a short story.

    from griptape.structures import Workflow
    from griptape.tasks import PromptTask
    from griptape.utils import StructureVisualizer

    # Root task: generates the world description from a list of keywords.
    world_task = PromptTask(
        "Create a fictional world based on the following key words {{ keywords|join(', ') }}",
        context={"keywords": ["fantasy", "ocean", "tidal lock"]},
        id="world",
    )


    def character_task(task_id: str, character_name: str) -> PromptTask:
        # Each character task depends on "world" and reads its output via parent_outputs.
        return PromptTask(
            "Based on the following world description create a character named {{ name }}:\n{{ parent_outputs['world'] }}",
            context={"name": character_name},
            id=task_id,
            parent_ids=["world"],
        )


    scotty_task = character_task("scotty", "Scotty")
    annie_task = character_task("annie", "Annie")

    # Final task: runs after the world and both characters are ready.
    story_task = PromptTask(
        "Based on the following description of the world and characters, write a short story:\n{{ parent_outputs['world'] }}\n{{ parent_outputs['scotty'] }}\n{{ parent_outputs['annie'] }}",
        id="story",
        parent_ids=["world", "scotty", "annie"],
    )

    # Each task is listed once; execution order follows parent_ids, not list order.
    workflow = Workflow(tasks=[world_task, story_task, scotty_task, annie_task])

    # Print a URL that renders the workflow graph.
    print(StructureVisualizer(workflow).to_url())

    workflow.run()

https://mermaid.ink/svg/Z3JhcGggVEQ7CglXb3JsZC0tPiBTdG9yeSAmIFNjb3R0eSAmIEFubmllOwoJU3Rvcnk7CglTY290dHktLT4gU3Rvcnk7CglBbm5pZS0tPiBTdG9yeTs=
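The last path segment of the printed URL appears to be the Base64-encoded Mermaid graph definition, so it can be inspected without opening a browser. The snippet below is a small sketch using only the Python standard library; the variable names are illustrative.

```python
import base64
from urllib.parse import urlparse

# URL printed by the example above.
url = (
    "https://mermaid.ink/svg/"
    "Z3JhcGggVEQ7CglXb3JsZC0tPiBTdG9yeSAmIFNjb3R0eSAmIEFubmllOwoJU3Rvcnk7"
    "CglTY290dHktLT4gU3Rvcnk7CglBbm5pZS0tPiBTdG9yeTs="
)

# Take the last path segment and decode it from Base64 to text.
encoded = urlparse(url).path.rsplit("/", 1)[-1]
graph_definition = base64.b64decode(encoded).decode("utf-8")
print(graph_definition)
```

The decoded text is a `graph TD;` definition with edges from World to Story, Scotty, and Annie, and from each character to Story, which matches the `parent_ids` declared in the example.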

[02/27/25 20:26:53] INFO PromptTask world

Input: Create a fictional world based on the

following key words fantasy, ocean, tidal lock

[02/27/25 20:27:05] INFO PromptTask world

Output: In the vast expanse of the cosmos lies a

unique planet named Thalassara, a world defined by

its eternal dance with the ocean and the stars.

Thalassara is a tidal-locked planet, meaning one

side perpetually faces its sun, Solara, while the

other remains in eternal night. This celestial

configuration has given rise to a world of stark

contrasts and wondrous beauty, where fantasy and

reality intertwine.

### Geography

**The Sunlit Realm:**

The side of Thalassara that faces Solara is known

as the Sunlit Realm. Here, the ocean is a

shimmering expanse of turquoise and azure, teeming

with vibrant marine life. The coastline is dotted

with towering cliffs and lush, verdant forests that

thrive under the constant sunlight. The air is warm

and filled with the scent of salt and blooming

flowers. The Sunlit Realm is home to the Solari, a

race of beings with golden skin and hair that glows

like the sun. They are skilled artisans and

architects, building magnificent cities of crystal

and stone that reflect the light in dazzling

patterns.

**The Twilight Belt:**

Encircling the planet is the Twilight Belt, a

narrow region where day and night meet in perpetual

dusk. This area is a place of mystery and magic,

where the sky is painted in hues of purple and

orange, and the stars are always visible. The

Twilight Belt is a land of rolling hills and

ancient ruins, where the Veilwalkers, a nomadic

people with the ability to manipulate shadows, make

their home. They are the keepers of ancient

knowledge and guardians of the balance between

light and dark.

**The Nightward Expanse:**

On the dark side of Thalassara lies the Nightward

Expanse, a realm of eternal night illuminated only

by the glow of bioluminescent flora and fauna. The

ocean here is a deep indigo, and the land is

covered in forests of towering, luminescent trees.

The Nocturnals, a race with skin as dark as the

night and eyes that gleam like stars, inhabit this

region. They are skilled navigators and

astronomers, using the constellations to guide

their way across the darkened seas.

### Culture and Society

**The Solari:**

The Solari are a vibrant and joyful people,

celebrating life with festivals and music. They

worship Solara, the sun goddess, and believe that

their purpose is to bring light and beauty to the

world. Their society is built on creativity and

innovation, with a strong emphasis on art and

architecture.

**The Veilwalkers:**

The Veilwalkers are a mysterious and enigmatic

people, known for their wisdom and connection to

the magical energies of Thalassara. They are

skilled in the art of shadow-weaving, using their

abilities to protect the balance between the

realms. Their society is based on a deep respect

for nature and the ancient traditions passed down

through generations.

**The Nocturnals:**

The Nocturnals are a contemplative and

introspective people, valuing knowledge and

exploration. They worship the stars and believe

that their destiny is written in the

constellations. Their society is centered around

learning and discovery, with a strong emphasis on

astronomy and navigation.

### Magic and Mysticism

Magic is an integral part of life on Thalassara,

with each region possessing its own unique form of

mystical energy. The Sunlit Realm is imbued with

solar magic, allowing the Solari to harness the

power of light and heat. The Twilight Belt is a

place of shadow magic, where the Veilwalkers can

manipulate darkness and illusion. The Nightward

Expanse is suffused with stellar magic, enabling

the Nocturnals to draw power from the stars and the

night sky.

### Conclusion

Thalassara is a world of contrasts and harmony,

where the ocean and the stars shape the lives of

its inhabitants. It is a place where fantasy and

reality blend seamlessly, creating a tapestry of

wonder and enchantment. In this world, the light

and the dark coexist in a delicate balance, each

side contributing to the beauty and mystery of

Thalassara.

INFO PromptTask scotty

Input: Based on the following world description

create a character named Scotty:

In the vast expanse of the cosmos lies a unique

planet named Thalassara, a world defined by its

eternal dance with the ocean and the stars.

Thalassara is a tidal-locked planet, meaning one

side perpetually faces its sun, Solara, while the

other remains in eternal night. This celestial

configuration has given rise to a world of stark

contrasts and wondrous beauty, where fantasy and

reality intertwine.

### Geography

**The Sunlit Realm:**

The side of Thalassara that faces Solara is known

as the Sunlit Realm. Here, the ocean is a

shimmering expanse of turquoise and azure, teeming

with vibrant marine life. The coastline is dotted

with towering cliffs and lush, verdant forests that

thrive under the constant sunlight. The air is warm

and filled with the scent of salt and blooming

flowers. The Sunlit Realm is home to the Solari, a

race of beings with golden skin and hair that glows

like the sun. They are skilled artisans and

architects, building magnificent cities of crystal

and stone that reflect the light in dazzling

patterns.

**The Twilight Belt:**

Encircling the planet is the Twilight Belt, a

narrow region where day and night meet in perpetual

dusk. This area is a place of mystery and magic,

where the sky is painted in hues of purple and

orange, and the stars are always visible. The

Twilight Belt is a land of rolling hills and

ancient ruins, where the Veilwalkers, a nomadic

people with the ability to manipulate shadows, make

their home. They are the keepers of ancient

knowledge and guardians of the balance between

light and dark.

**The Nightward Expanse:**

On the dark side of Thalassara lies the Nightward

Expanse, a realm of eternal night illuminated only

by the glow of bioluminescent flora and fauna. The

ocean here is a deep indigo, and the land is

covered in forests of towering, luminescent trees.

The Nocturnals, a race with skin as dark as the

night and eyes that gleam like stars, inhabit this

region. They are skilled navigators and

astronomers, using the constellations to guide

their way across the darkened seas.

### Culture and Society

**The Solari:**

The Solari are a vibrant and joyful people,

celebrating life with festivals and music. They

worship Solara, the sun goddess, and believe that

their purpose is to bring light and beauty to the

world. Their society is built on creativity and

innovation, with a strong emphasis on art and

architecture.

**The Veilwalkers:**

The Veilwalkers are a mysterious and enigmatic

people, known for their wisdom and connection to

the magical energies of Thalassara. They are

skilled in the art of shadow-weaving, using their

abilities to protect the balance between the

realms. Their society is based on a deep respect

for nature and the ancient traditions passed down

through generations.

**The Nocturnals:**

The Nocturnals are a contemplative and

introspective people, valuing knowledge and

exploration. They worship the stars and believe

that their destiny is written in the

constellations. Their society is centered around

learning and discovery, with a strong emphasis on

astronomy and navigation.

### Magic and Mysticism

Magic is an integral part of life on Thalassara,

with each region possessing its own unique form of

mystical energy. The Sunlit Realm is imbued with

solar magic, allowing the Solari to harness the

power of light and heat. The Twilight Belt is a

place of shadow magic, where the Veilwalkers can

manipulate darkness and illusion. The Nightward

Expanse is suffused with stellar magic, enabling

the Nocturnals to draw power from the stars and the

night sky.

### Conclusion

Thalassara is a world of contrasts and harmony,

where the ocean and the stars shape the lives of

its inhabitants. It is a place where fantasy and

reality blend seamlessly, creating a tapestry of

wonder and enchantment. In this world, the light

and the dark coexist in a delicate balance, each

side contributing to the beauty and mystery of

Thalassara.

INFO PromptTask annie

Input: Based on the following world description

create a character named Annie:

In the vast expanse of the cosmos lies a unique

planet named Thalassara, a world defined by its

eternal dance with the ocean and the stars.

Thalassara is a tidal-locked planet, meaning one

side perpetually faces its sun, Solara, while the

other remains in eternal night. This celestial

configuration has given rise to a world of stark

contrasts and wondrous beauty, where fantasy and

reality intertwine.

### Geography

**The Sunlit Realm:**

The side of Thalassara that faces Solara is known

as the Sunlit Realm. Here, the ocean is a

shimmering expanse of turquoise and azure, teeming

with vibrant marine life. The coastline is dotted

with towering cliffs and lush, verdant forests that

thrive under the constant sunlight. The air is warm

and filled with the scent of salt and blooming

flowers. The Sunlit Realm is home to the Solari, a

race of beings with golden skin and hair that glows

like the sun. They are skilled artisans and

architects, building magnificent cities of crystal

and stone that reflect the light in dazzling

patterns.

**The Twilight Belt:**

Encircling the planet is the Twilight Belt, a

narrow region where day and night meet in perpetual

dusk. This area is a place of mystery and magic,

where the sky is painted in hues of purple and

orange, and the stars are always visible. The

Twilight Belt is a land of rolling hills and

ancient ruins, where the Veilwalkers, a nomadic

people with the ability to manipulate shadows, make

their home. They are the keepers of ancient

knowledge and guardians of the balance between

light and dark.

**The Nightward Expanse:**

On the dark side of Thalassara lies the Nightward

Expanse, a realm of eternal night illuminated only

by the glow of bioluminescent flora and fauna. The

ocean here is a deep indigo, and the land is

covered in forests of towering, luminescent trees.

The Nocturnals, a race with skin as dark as the

night and eyes that gleam like stars, inhabit this

region. They are skilled navigators and

astronomers, using the constellations to guide

their way across the darkened seas.

### Culture and Society

**The Solari:**

The Solari are a vibrant and joyful people,

celebrating life with festivals and music. They

worship Solara, the sun goddess, and believe that

their purpose is to bring light and beauty to the

world. Their society is built on creativity and

innovation, with a strong emphasis on art and

architecture.

**The Veilwalkers:**

The Veilwalkers are a mysterious and enigmatic

people, known for their wisdom and connection to

the magical energies of Thalassara. They are

skilled in the art of shadow-weaving, using their

abilities to protect the balance between the

realms. Their society is based on a deep respect

for nature and the ancient traditions passed down

through generations.

**The Nocturnals:**

The Nocturnals are a contemplative and

introspective people, valuing knowledge and

exploration. They worship the stars and believe

that their destiny is written in the

constellations. Their society is centered around

learning and discovery, with a strong emphasis on

astronomy and navigation.

### Magic and Mysticism

Magic is an integral part of life on Thalassara,

with each region possessing its own unique form of

mystical energy. The Sunlit Realm is imbued with

solar magic, allowing the Solari to harness the

power of light and heat. The Twilight Belt is a

place of shadow magic, where the Veilwalkers can

manipulate darkness and illusion. The Nightward

Expanse is suffused with stellar magic, enabling

the Nocturnals to draw power from the stars and the

night sky.

### Conclusion

Thalassara is a world of contrasts and harmony,

where the ocean and the stars shape the lives of

its inhabitants. It is a place where fantasy and

reality blend seamlessly, creating a tapestry of

wonder and enchantment. In this world, the light

and the dark coexist in a delicate balance, each

side contributing to the beauty and mystery of

Thalassara.

[02/27/25 20:27:14] INFO PromptTask scotty

Output: **Character Name:** Scotty

**Race:** Veilwalker

**Appearance:**

Scotty is a striking figure, with skin that seems

to shimmer with the hues of twilight—deep purples

and soft oranges that shift with the light. His

hair is a cascade of silver, reminiscent of

moonlight, and his eyes are a piercing shade of

amethyst, reflecting the mysteries of the dusk. He

wears garments woven from shadow silk, a fabric

unique to the Veilwalkers, which allows him to

blend seamlessly into the twilight landscape.

**Background:**

Born into a lineage of revered shadow-weavers,

Scotty has always felt a profound connection to the

magical energies of the Twilight Belt. From a young

age, he displayed an exceptional talent for

manipulating shadows, a skill that earned him the

respect of his peers and the attention of the

elders. His family, known for their wisdom and

dedication to preserving the balance between light

and dark, instilled in him a deep respect for the

ancient traditions and the natural world.

**Personality:**

Scotty is introspective and thoughtful, often lost

in contemplation of the mysteries of Thalassara. He

possesses a quiet confidence and a gentle demeanor,

preferring to observe and listen rather than

dominate conversations. Despite his calm exterior,

he harbors a fierce determination to protect the

delicate balance of his world. Scotty is deeply

empathetic, able to sense the emotions of those

around him, which makes him a compassionate and

understanding friend.

**Abilities:**

As a skilled shadow-weaver, Scotty can manipulate

darkness to create illusions, conceal himself, or

even form tangible constructs. His abilities are

not limited to mere trickery; he can also use

shadows to heal or protect, drawing on the magical

energies of the Twilight Belt. Scotty is also a

keeper of ancient knowledge, with a vast

understanding of the history and lore of

Thalassara, which he uses to guide his actions and

decisions.

**Goals and Motivations:**

Scotty is driven by a desire to maintain the

harmony between the realms of Thalassara. He seeks

to uncover the secrets of the ancient ruins

scattered across the Twilight Belt, believing that

they hold the key to understanding the true nature

of his world. His ultimate goal is to ensure that

the balance between light and dark is preserved,

allowing the beauty and magic of Thalassara to

endure for generations to come.

**Relationships:**

Scotty has a close bond with his fellow

Veilwalkers, particularly his mentor, an elder

named Lirael, who has guided him in honing his

shadow-weaving abilities. He also shares a deep

friendship with a Nocturnal astronomer named Kael,

with whom he often exchanges knowledge and insights

about the stars and the night sky. Though he is

more reserved with the Solari, Scotty respects

their artistry and occasionally collaborates with

them on projects that blend light and shadow.

**Quirks:**

Scotty has a habit of speaking in metaphors, often

drawing on the imagery of the twilight and the

stars to convey his thoughts. He also carries a

small, intricately carved obsidian pendant, a

family heirloom that he believes connects him to

the spirits of his ancestors. When deep in thought,

he can often be found tracing the patterns of the

constellations with his fingers, a gesture that

calms and centers him.

[02/27/25 20:27:17] INFO PromptTask annie

Output: **Character Name:** Annie

**Race:** Veilwalker

**Appearance:**

Annie possesses the ethereal beauty characteristic

of the Veilwalkers. Her skin is a soft, dusky hue,

reminiscent of the twilight sky, and her long,

flowing hair shimmers with shades of deep purple

and silver, like the first stars appearing at dusk.

Her eyes are a striking violet, reflecting the

perpetual twilight of her homeland, and they seem

to hold the secrets of the ages.

**Background:**

Born into a family of renowned shadow-weavers,

Annie grew up amidst the rolling hills and ancient

ruins of the Twilight Belt. Her parents were

respected guardians of the balance between light

and dark, and they instilled in her a deep respect

for the natural world and the ancient traditions of

their people. From a young age, Annie showed a

natural affinity for shadow magic, often seen

weaving intricate patterns of darkness and light

with ease.

**Personality:**

Annie is introspective and thoughtful, often lost

in contemplation of the mysteries of Thalassara.

She possesses a quiet confidence and a gentle

demeanor, making her a calming presence among her

peers. Despite her serene exterior, she harbors a

fierce determination to protect the delicate

balance of her world. Annie is deeply curious,

always seeking to learn more about the ancient

knowledge of her people and the magical energies

that flow through Thalassara.

**Abilities:**

As a skilled shadow-weaver, Annie can manipulate

darkness and illusion, creating mesmerizing

displays of light and shadow. She has honed her

abilities to blend seamlessly into her

surroundings, moving silently and unseen when

necessary. Her connection to the magical energies

of the Twilight Belt allows her to sense

disturbances in the balance between light and dark,

making her an invaluable guardian of her realm.

**Goals:**

Annie is driven by a desire to preserve the harmony

of Thalassara and to uncover the hidden truths of

her world. She dreams of bridging the gap between

the different realms, fostering understanding and

cooperation among the Solari, Veilwalkers, and

Nocturnals. Her ultimate goal is to ensure that the

beauty and mystery of Thalassara endure for

generations to come.

**Relationships:**

Annie maintains strong ties with her family and the

elders of her community, often seeking their

guidance and wisdom. She has formed a close bond

with a Nocturnal astronomer named Kael, with whom

she shares a mutual fascination for the stars and

the mysteries they hold. Together, they explore the

boundaries of their respective magics, seeking to

unlock the secrets of the cosmos.

**Role in the World:**

Annie serves as a bridge between the realms, using

her shadow-weaving abilities to maintain the

balance between light and dark. She is a keeper of

ancient knowledge and a protector of the natural

world, ensuring that the harmony of Thalassara is

preserved. Her journey is one of discovery and

enlightenment, as she seeks to unravel the

mysteries of her world and her place within it.

INFO PromptTask story

Input: Based on the following description of the

world and characters, write a short story:

In the vast expanse of the cosmos lies a unique

planet named Thalassara, a world defined by its

eternal dance with the ocean and the stars.

Thalassara is a tidal-locked planet, meaning one

side perpetually faces its sun, Solara, while the

other remains in eternal night. This celestial

configuration has given rise to a world of stark

contrasts and wondrous beauty, where fantasy and

reality intertwine.

### Geography

**The Sunlit Realm:**

The side of Thalassara that faces Solara is known

as the Sunlit Realm. Here, the ocean is a

shimmering expanse of turquoise and azure, teeming

with vibrant marine life. The coastline is dotted

with towering cliffs and lush, verdant forests that

thrive under the constant sunlight. The air is warm

and filled with the scent of salt and blooming

flowers. The Sunlit Realm is home to the Solari, a

race of beings with golden skin and hair that glows

like the sun. They are skilled artisans and

architects, building magnificent cities of crystal

and stone that reflect the light in dazzling

patterns.

**The Twilight Belt:**

Encircling the planet is the Twilight Belt, a

narrow region where day and night meet in perpetual

dusk. This area is a place of mystery and magic,

where the sky is painted in hues of purple and

orange, and the stars are always visible. The

Twilight Belt is a land of rolling hills and

ancient ruins, where the Veilwalkers, a nomadic

people with the ability to manipulate shadows, make

their home. They are the keepers of ancient

knowledge and guardians of the balance between

light and dark.

**The Nightward Expanse:**

On the dark side of Thalassara lies the Nightward

Expanse, a realm of eternal night illuminated only

by the glow of bioluminescent flora and fauna. The

ocean here is a deep indigo, and the land is

covered in forests of towering, luminescent trees.

The Nocturnals, a race with skin as dark as the

night and eyes that gleam like stars, inhabit this

region. They are skilled navigators and

astronomers, using the constellations to guide

their way across the darkened seas.

### Culture and Society

**The Solari:**

The Solari are a vibrant and joyful people,

celebrating life with festivals and music. They

worship Solara, the sun goddess, and believe that

their purpose is to bring light and beauty to the

world. Their society is built on creativity and

innovation, with a strong emphasis on art and

architecture.

**The Veilwalkers:**

The Veilwalkers are a mysterious and enigmatic

people, known for their wisdom and connection to

the magical energies of Thalassara. They are

skilled in the art of shadow-weaving, using their

abilities to protect the balance between the

realms. Their society is based on a deep respect

for nature and the ancient traditions passed down

through generations.

**The Nocturnals:**

The Nocturnals are a contemplative and

introspective people, valuing knowledge and

exploration. They worship the stars and believe

that their destiny is written in the

constellations. Their society is centered around

learning and discovery, with a strong emphasis on

astronomy and navigation.

### Magic and Mysticism

Magic is an integral part of life on Thalassara,

with each region possessing its own unique form of

mystical energy. The Sunlit Realm is imbued with

solar magic, allowing the Solari to harness the

power of light and heat. The Twilight Belt is a

place of shadow magic, where the Veilwalkers can

manipulate darkness and illusion. The Nightward

Expanse is suffused with stellar magic, enabling

the Nocturnals to draw power from the stars and the

night sky.

### Conclusion

Thalassara is a world of contrasts and harmony,

where the ocean and the stars shape the lives of

its inhabitants. It is a place where fantasy and

reality blend seamlessly, creating a tapestry of

wonder and enchantment. In this world, the light

and the dark coexist in a delicate balance, each

side contributing to the beauty and mystery of

Thalassara.

**Character Name:** Scotty

**Race:** Veilwalker

**Appearance:**

Scotty is a striking figure, with skin that seems

to shimmer with the hues of twilight—deep purples

and soft oranges that shift with the light. His

hair is a cascade of silver, reminiscent of

moonlight, and his eyes are a piercing shade of

amethyst, reflecting the mysteries of the dusk. He

wears garments woven from shadow silk, a fabric

unique to the Veilwalkers, which allows him to

blend seamlessly into the twilight landscape.

**Background:**

Born into a lineage of revered shadow-weavers,

Scotty has always felt a profound connection to the

magical energies of the Twilight Belt. From a young

age, he displayed an exceptional talent for

manipulating shadows, a skill that earned him the

respect of his peers and the attention of the

elders. His family, known for their wisdom and

dedication to preserving the balance between light

and dark, instilled in him a deep respect for the

ancient traditions and the natural world.

**Personality:**

Scotty is introspective and thoughtful, often lost

in contemplation of the mysteries of Thalassara. He

possesses a quiet confidence and a gentle demeanor,

preferring to observe and listen rather than

dominate conversations. Despite his calm exterior,

he harbors a fierce determination to protect the

delicate balance of his world. Scotty is deeply

empathetic, able to sense the emotions of those

around him, which makes him a compassionate and

understanding friend.

**Abilities:**

As a skilled shadow-weaver, Scotty can manipulate

darkness to create illusions, conceal himself, or

even form tangible constructs. His abilities are

not limited to mere trickery; he can also use

shadows to heal or protect, drawing on the magical

energies of the Twilight Belt. Scotty is also a

keeper of ancient knowledge, with a vast

understanding of the history and lore of

Thalassara, which he uses to guide his actions and

decisions.

**Goals and Motivations:**

Scotty is driven by a desire to maintain the

harmony between the realms of Thalassara. He seeks

to uncover the secrets of the ancient ruins

scattered across the Twilight Belt, believing that

they hold the key to understanding the true nature

of his world. His ultimate goal is to ensure that

the balance between light and dark is preserved,

allowing the beauty and magic of Thalassara to

endure for generations to come.

**Relationships:**

Scotty has a close bond with his fellow

Veilwalkers, particularly his mentor, an elder

named Lirael, who has guided him in honing his

shadow-weaving abilities. He also shares a deep

friendship with a Nocturnal astronomer named Kael,

with whom he often exchanges knowledge and insights

about the stars and the night sky. Though he is

more reserved with the Solari, Scotty respects

their artistry and occasionally collaborates with

them on projects that blend light and shadow.

**Quirks:**

Scotty has a habit of speaking in metaphors, often

drawing on the imagery of the twilight and the

stars to convey his thoughts. He also carries a

small, intricately carved obsidian pendant, a

family heirloom that he believes connects him to

the spirits of his ancestors. When deep in thought,

he can often be found tracing the patterns of the

constellations with his fingers, a gesture that

calms and centers him.

**Character Name:** Annie

**Race:** Veilwalker

**Appearance:**

Annie possesses the ethereal beauty characteristic

of the Veilwalkers. Her skin is a soft, dusky hue,

reminiscent of the twilight sky, and her long,

flowing hair shimmers with shades of deep purple

and silver, like the first stars appearing at dusk.

Her eyes are a striking violet, reflecting the

perpetual twilight of her homeland, and they seem

to hold the secrets of the ages.

**Background:**

Born into a family of renowned shadow-weavers,

Annie grew up amidst the rolling hills and ancient

ruins of the Twilight Belt. Her parents were

respected guardians of the balance between light

and dark, and they instilled in her a deep respect

for the natural world and the ancient traditions of

their people. From a young age, Annie showed a

natural affinity for shadow magic, often seen

weaving intricate patterns of darkness and light

with ease.

**Personality:**

Annie is introspective and thoughtful, often lost

in contemplation of the mysteries of Thalassara.

She possesses a quiet confidence and a gentle

demeanor, making her a calming presence among her

peers. Despite her serene exterior, she harbors a

fierce determination to protect the delicate

balance of her world. Annie is deeply curious,

always seeking to learn more about the ancient

knowledge of her people and the magical energies

that flow through Thalassara.

**Abilities:**

As a skilled shadow-weaver, Annie can manipulate

darkness and illusion, creating mesmerizing

displays of light and shadow. She has honed her

abilities to blend seamlessly into her

surroundings, moving silently and unseen when

necessary. Her connection to the magical energies

of the Twilight Belt allows her to sense

disturbances in the balance between light and dark,

making her an invaluable guardian of her realm.

**Goals:**

Annie is driven by a desire to preserve the harmony

of Thalassara and to uncover the hidden truths of

her world. She dreams of bridging the gap between

the different realms, fostering understanding and

cooperation among the Solari, Veilwalkers, and

Nocturnals. Her ultimate goal is to ensure that the

beauty and mystery of Thalassara endure for

generations to come.

**Relationships:**

Annie maintains strong ties with her family and the

elders of her community, often seeking their

guidance and wisdom. She has formed a close bond

with a Nocturnal astronomer named Kael, with whom

she shares a mutual fascination for the stars and

the mysteries they hold. Together, they explore the

boundaries of their respective magics, seeking to

unlock the secrets of the cosmos.

**Role in the World:**

Annie serves as a bridge between the realms, using

her shadow-weaving abilities to maintain the

balance between light and dark. She is a keeper of

ancient knowledge and a protector of the natural

world, ensuring that the harmony of Thalassara is

preserved. Her journey is one of discovery and

enlightenment, as she seeks to unravel the

mysteries of her world and her place within it.

[02/27/25 20:27:27] INFO PromptTask story

Output: In the heart of the Twilight Belt, where

the sky was a perpetual canvas of purples and

oranges, Scotty and Annie walked side by side,

their footsteps silent on the ancient stone path.

The ruins of an old Veilwalker temple loomed ahead,

its shadowy silhouette blending seamlessly with the

dusk. The air was thick with the scent of

night-blooming flowers, and the distant call of a

nocturnal creature echoed through the hills.

Scotty paused, tracing the patterns of the

constellations with his fingers, a gesture that

always calmed him. "The stars are unusually bright

tonight," he remarked, his voice a soft murmur

against the backdrop of the twilight.

Annie nodded, her violet eyes reflecting the

starlight. "Perhaps they have something to tell

us," she replied, her voice carrying the weight of

ancient wisdom. She had always believed that the

stars held secrets, messages from the cosmos

waiting to be deciphered.

Their destination was the heart of the temple, a

place where the Veilwalkers believed the veil

between worlds was thinnest. It was here that

Scotty hoped to find answers, to uncover the

secrets of the ancient ruins that had long

intrigued him.

As they entered the temple, the air grew cooler,

and the shadows deepened. Scotty raised a hand,

weaving the darkness into a soft glow that

illuminated their path. The walls were adorned with

intricate carvings, depicting scenes of Veilwalkers

communing with the stars, their hands outstretched

to the heavens.

Annie ran her fingers over the carvings, her touch

gentle and reverent. "These stories are as old as

Thalassara itself," she mused. "They speak of a

time when the balance between light and dark was

first established."

Scotty nodded, his amethyst eyes scanning the

carvings for clues. "And perhaps they hold the key

to maintaining that balance," he said, his voice

filled with quiet determination.

Together, they moved deeper into the temple, their

footsteps echoing in the silence. At the center of

the chamber, they found a stone altar, its surface

etched with symbols that pulsed with a faint,

otherworldly light.

Scotty reached out, his fingers brushing the

symbols. As he did, a vision unfolded before him—a

tapestry of light and shadow, weaving together the

stories of Thalassara. He saw the Solari, their

cities gleaming in the sun; the Nocturnals,

navigating the darkened seas by starlight; and the

Veilwalkers, guardians of the balance, weaving

shadows to protect their world.

Annie watched, her heart swelling with awe and

understanding. "The balance is not just a concept,"

she whispered. "It's a living, breathing force,

woven into the very fabric of our world."

Scotty nodded, his eyes shining with newfound

clarity. "And it's up to us to preserve it," he

said, his voice filled with resolve.

As they left the temple, the stars seemed to shine

even brighter, as if acknowledging their vow.

Together, Scotty and Annie walked back into the

twilight, their hearts united in purpose. They knew

that the path ahead would not be easy, but they

were ready to face whatever challenges lay ahead,

guided by the light of the stars and the wisdom of

the ancients.

In the vast expanse of Thalassara, where the ocean

and the stars danced in eternal harmony, Scotty and

Annie found their place, as guardians of the

balance, protectors of the beauty and mystery of

their world. And as they journeyed onward, they

carried with them the hope that their efforts would

ensure the magic of Thalassara endured for

generations to come.

Note that we use the StructureVisualizer to get a visual representation of the workflow. If we visit the printed URL, it should look like this:

Info

Output edited for brevity

Declarative vs Imperative Syntax

The above example showed how to create a workflow using the declarative syntax via the parent_ids init param, but there are a number of declarative and imperative options to choose from. There is no functional difference between them; they exist only to let you structure your code in whatever way is most readable for your use case. The possibilities are illustrated below.
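To see why these options are interchangeable, it can help to think of each one as just another way of recording the same parent-to-child edges. The sketch below is not Griptape code: `edges_from_specs` and `run_order` are hypothetical helpers, and plain dictionaries stand in for tasks. It assumes only that `parent_ids` and `child_ids` both describe edges of the same graph.

```python
from graphlib import TopologicalSorter


def edges_from_specs(specs: list[dict]) -> set[tuple[str, str]]:
    """Collect (parent_id, child_id) edges from parent_ids/child_ids entries."""
    edges: set[tuple[str, str]] = set()
    for spec in specs:
        for parent_id in spec.get("parent_ids", []):
            edges.add((parent_id, spec["id"]))
        for child_id in spec.get("child_ids", []):
            edges.add((spec["id"], child_id))
    return edges


def run_order(edges: set[tuple[str, str]]) -> list[str]:
    """Return one valid execution order: every parent before its children."""
    sorter = TopologicalSorter()
    for parent_id, child_id in edges:
        sorter.add(child_id, parent_id)  # the child depends on the parent
    return list(sorter.static_order())


# Three hypothetical declarations of the same animal -> adjective -> new-animal chain.
via_parents = [
    {"id": "animal"},
    {"id": "adjective", "parent_ids": ["animal"]},
    {"id": "new-animal", "parent_ids": ["adjective"]},
]
via_children = [
    {"id": "animal", "child_ids": ["adjective"]},
    {"id": "adjective", "child_ids": ["new-animal"]},
    {"id": "new-animal"},
]
mixed = [
    {"id": "animal"},
    {"id": "adjective", "parent_ids": ["animal"], "child_ids": ["new-animal"]},
    {"id": "new-animal"},
]

same_graph = edges_from_specs(via_parents) == edges_from_specs(via_children) == edges_from_specs(mixed)
order = run_order(edges_from_specs(mixed))
```

All three declarations resolve to the same two edges, so anything that only looks at the resulting graph cannot tell them apart.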

Declaratively specify parents (same as above example):

from griptape.rules import Rule
from griptape.structures import Workflow
from griptape.tasks import PromptTask

workflow = Workflow(
    tasks=[
        PromptTask("Name an animal", id="animal"),
        PromptTask("Describe {{ parent_outputs['animal'] }} with an adjective", id="adjective", parent_ids=["animal"]),
        PromptTask("Name a {{ parent_outputs['adjective'] }} animal", id="new-animal", parent_ids=["adjective"]),
    ],
    rules=[Rule("output a single lowercase word")],
)

workflow.run()

[02/27/25 20:27:58] INFO PromptTask animal

Input: Name an animal

INFO PromptTask animal

Output: tiger

INFO PromptTask adjective

Input: Describe tiger with an adjective

[02/27/25 20:27:59] INFO PromptTask adjective

Output: striped

INFO PromptTask new-animal

Input: Name a striped animal

INFO PromptTask new-animal

Output: zebra

Declaratively specify children:

from griptape.rules import Rule
from griptape.structures import Workflow
from griptape.tasks import PromptTask

workflow = Workflow(
    tasks=[
        PromptTask("Name an animal", id="animal", child_ids=["adjective"]),
        PromptTask(
            "Describe {{ parent_outputs['animal'] }} with an adjective", id="adjective", child_ids=["new-animal"]
        ),
        PromptTask("Name a {{ parent_outputs['adjective'] }} animal", id="new-animal"),
    ],
    rules=[Rule("output a single lowercase word")],
)

workflow.run()

[02/27/25 20:25:44] INFO PromptTask animal

Input: Name an animal

INFO PromptTask animal

Output: tiger

INFO PromptTask adjective

Input: Describe tiger with an adjective

INFO PromptTask adjective

Output: striped

INFO PromptTask new-animal

Input: Name a striped animal

[02/27/25 20:25:45] INFO PromptTask new-animal

Output: zebra

Declaratively specify a mix of parents and children:

from griptape.rules import Rule
from griptape.structures import Workflow
from griptape.tasks import PromptTask

workflow = Workflow(
    tasks=[
        PromptTask("Name an animal", id="animal"),
        PromptTask(
            "Describe {{ parent_outputs['animal'] }} with an adjective",
            id="adjective",
            parent_ids=["animal"],
            child_ids=["new-animal"],
        ),
        PromptTask("Name a {{ parent_outputs['adjective'] }} animal", id="new-animal"),
    ],
    rules=[Rule("output a single lowercase word")],
)

workflow.run()

[02/27/25 20:27:31] INFO PromptTask animal

Input: Name an animal

[02/27/25 20:27:32] INFO PromptTask animal

Output: tiger

INFO PromptTask adjective

Input: Describe tiger with an adjective

[02/27/25 20:27:33] INFO PromptTask adjective

Output: striped

INFO PromptTask new-animal

Input: Name a striped animal

INFO PromptTask new-animal

Output: zebra

Imperatively specify parents:

from griptape.rules import Rule
from griptape.structures import Workflow
from griptape.tasks import PromptTask

animal_task = PromptTask("Name an animal", id="animal")
adjective_task = PromptTask("Describe {{ parent_outputs['animal'] }} with an adjective", id="adjective")
new_animal_task = PromptTask("Name a {{ parent_outputs['adjective'] }} animal", id="new-animal")

adjective_task.add_parent(animal_task)
new_animal_task.add_parent(adjective_task)

workflow = Workflow(
    tasks=[animal_task, adjective_task, new_animal_task],
    rules=[Rule("output a single lowercase word")],
)

workflow.run()

[02/27/25 20:27:19] INFO PromptTask animal

Input: Name an animal

[02/27/25 20:27:20] INFO PromptTask animal

Output: tiger

INFO PromptTask adjective

Input: Describe tiger with an adjective

INFO PromptTask adjective

Output: striped

INFO PromptTask new-animal

Input: Name a striped animal

[02/27/25 20:27:21] INFO PromptTask new-animal

Output: zebra

Imperatively specify children:

from griptape.rules import Rule
from griptape.structures import Workflow
from griptape.tasks import PromptTask

animal_task = PromptTask("Name an animal", id="animal")
adjective_task = PromptTask("Describe {{ parent_outputs['animal'] }} with an adjective", id="adjective")
new_animal_task = PromptTask("Name a {{ parent_outputs['adjective'] }} animal", id="new-animal")

animal_task.add_child(adjective_task)
adjective_task.add_child(new_animal_task)

workflow = Workflow(
    tasks=[animal_task, adjective_task, new_animal_task],
    rules=[Rule("output a single lowercase word")],
)

workflow.run()

[02/27/25 20:27:35] INFO PromptTask animal

Input: Name an animal

INFO PromptTask animal

Output: tiger

INFO PromptTask adjective

Input: Describe tiger with an adjective

INFO PromptTask adjective

Output: striped

INFO PromptTask new-animal

Input: Name a striped animal

[02/27/25 20:27:36] INFO PromptTask new-animal

Output: zebra

Imperatively specify a mix of parents and children:

from griptape.rules import Rule
from griptape.structures import Workflow
from griptape.tasks import PromptTask

workflow = Workflow(
    rules=[Rule("output a single lowercase word")],
)

animal_task = PromptTask("Name an animal", id="animal", structure=workflow)
adjective_task = PromptTask(
    "Describe {{ parent_outputs['animal'] }} with an adjective", id="adjective", structure=workflow
)
new_animal_task = PromptTask("Name a {{ parent_outputs['adjective'] }} animal", id="new-animal", structure=workflow)

adjective_task.add_parent(animal_task)
adjective_task.add_child(new_animal_task)

workflow.run()

[02/27/25 20:27:14] INFO PromptTask animal

Input: Name an animal

INFO PromptTask animal

Output: tiger

INFO PromptTask adjective

Input: Describe tiger with an adjective

[02/27/25 20:27:15] INFO PromptTask adjective

Output: striped

INFO PromptTask new-animal

Input: Name a striped animal

INFO PromptTask new-animal

Output: zebra

Or even mix imperative and declarative:

from griptape.rules import Rule
from griptape.structures import Workflow
from griptape.tasks import PromptTask

animal_task = PromptTask("Name an animal", id="animal")
adjective_task = PromptTask(
    "Describe {{ parent_outputs['animal'] }} with an adjective", id="adjective", parent_ids=["animal"]
)
new_animal_task = PromptTask("Name a {{ parent_outputs['adjective'] }} animal", id="new-animal")

new_animal_task.add_parent(adjective_task)

workflow = Workflow(
    tasks=[animal_task, adjective_task, new_animal_task],
    rules=[Rule("output a single lowercase word")],
)

workflow.run()

[02/27/25 20:28:14] INFO PromptTask animal

Input: Name an animal

INFO PromptTask animal

Output: tiger

INFO PromptTask adjective

Input: Describe tiger with an adjective

[02/27/25 20:28:15] INFO PromptTask adjective

Output: striped

INFO PromptTask new-animal

Input: Name a striped animal

INFO PromptTask new-animal

Output: zebra

Insert Parallel Tasks

Workflow.insert_tasks() provides a convenient way to insert parallel tasks between parents and children.

Info

By default, all children are removed from the parent task and all parent tasks are removed from the child task. If you want to keep these parent-child relationships, then set the preserve_relationship parameter to True.
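The effect of preserve_relationship can be pictured on a bare set of edges. The sketch below is a simplified model, not Griptape's implementation: `insert_between` is a hypothetical helper, tasks are plain strings, and it models only the direct edge between the given parent and child. How the real method treats any other relationships those tasks have is not covered here.

```python
Edge = tuple[str, str]  # (parent_id, child_id)


def insert_between(
    edges: set[Edge],
    parent: str,
    new_tasks: list[str],
    child: str,
    preserve_relationship: bool = False,
) -> set[Edge]:
    """Return a new edge set with new_tasks placed between parent and child."""
    updated = set(edges)
    if not preserve_relationship:
        # Drop the direct parent -> child edge, if there is one.
        updated.discard((parent, child))
    for task in new_tasks:
        updated.add((parent, task))  # parent feeds each inserted task
        updated.add((task, child))   # each inserted task feeds the child
    return updated


# Start from a direct animal -> new-animal edge (an assumption for illustration).
direct = {("animal", "new-animal")}

default_result = insert_between(direct, "animal", ["adjective", "color"], "new-animal")
preserved_result = insert_between(
    direct, "animal", ["adjective", "color"], "new-animal", preserve_relationship=True
)
```

In this model the default result routes everything through the two inserted tasks, while the preserved result keeps the original direct edge alongside the new ones.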

Imperatively insert parallel tasks between a parent and child:

from griptape.rules import Rule
from griptape.structures import Workflow
from griptape.tasks import PromptTask

workflow = Workflow(
    rules=[Rule("output a single lowercase word")],
)

animal_task = PromptTask("Name an animal", id="animal")
adjective_task = PromptTask("Describe {{ parent_outputs['animal'] }} with an adjective", id="adjective")
color_task = PromptTask("Describe {{ parent_outputs['animal'] }} with a color", id="color")
new_animal_task = PromptTask("Name an animal described as: \n{{ parents_output_text }}", id="new-animal")

# The following workflow runs animal_task, then (adjective_task, and color_task)
# in parallel, then finally new_animal_task.
#
# In other words, the output of animal_task is passed to both adjective_task and color_task
# and the outputs of adjective_task and color_task are then passed to new_animal_task.
workflow.add_task(animal_task)
workflow.add_task(new_animal_task)
workflow.insert_tasks(animal_task, [adjective_task, color_task], new_animal_task)

workflow.run()

[02/27/25 20:27:01] INFO PromptTask animal

Input: Name an animal

[02/27/25 20:27:05] INFO PromptTask animal

Output: tiger

INFO PromptTask color

Input: Describe tiger with a color

INFO PromptTask adjective

Input: Describe tiger with an adjective

INFO PromptTask color

Output: orange

INFO PromptTask adjective

Output: striped

INFO PromptTask new-animal

Input: Name an animal described as:

striped

orange

INFO PromptTask new-animal

Output: tiger

Bitshift Composition

Task relationships can also be set up with the Python bitshift operators >> and <<. The following statements are all functionally equivalent:

task1 >> task2
task1.add_child(task2)

task2 << task1
task2.add_parent(task1)

task3 >> [task4, task5]
task3.add_children([task4, task5])

When composing tasks with the bitshift operators, the relationship is set in the direction that the operator points.

For example, task1 >> task2 means that task1 runs first and task2 runs second.

Multiple operators can be chained. Keep in mind that the chain is evaluated left to right and that each operation returns its right-hand object, which becomes the left-hand side of the next operator. For example:

task1 >> task2 >> task3 << task4

is equivalent to:

task1.add_child(task2)
task2.add_child(task3)
task3.add_parent(task4)
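The chaining rule is easier to follow with a toy implementation. The class below is a hypothetical `ToyTask`, not Griptape's task class. It assumes only what the text above states: `>>` adds a child, `<<` adds a parent, and each operation returns the object on its right.

```python
from __future__ import annotations


class ToyTask:
    """Hypothetical stand-in for a task; not Griptape's task class."""

    def __init__(self, task_id: str) -> None:
        self.id = task_id
        self.parent_ids: list[str] = []
        self.child_ids: list[str] = []

    def add_child(self, child: ToyTask) -> ToyTask:
        # Record the edge on both ends, skipping duplicates.
        if child.id not in self.child_ids:
            self.child_ids.append(child.id)
        if self.id not in child.parent_ids:
            child.parent_ids.append(self.id)
        return child

    def add_parent(self, parent: ToyTask) -> ToyTask:
        parent.add_child(self)
        return parent

    def __rshift__(self, other: ToyTask | list[ToyTask]) -> ToyTask | list[ToyTask]:
        # self >> other: self runs first, other runs second.
        for task in other if isinstance(other, list) else [other]:
            self.add_child(task)
        return other  # return the right-hand operand so the chain can continue

    def __lshift__(self, other: ToyTask | list[ToyTask]) -> ToyTask | list[ToyTask]:
        # self << other: other runs first, self runs second.
        for task in other if isinstance(other, list) else [other]:
            self.add_parent(task)
        return other  # again the right-hand operand


task1, task2, task3, task4 = (ToyTask(f"task{n}") for n in range(1, 5))

# Evaluated as ((task1 >> task2) >> task3) << task4.
last = task1 >> task2 >> task3 << task4
```

Because `task2 >> task3` hands back `task3`, the trailing `<< task4` attaches `task4` as a second parent of `task3` rather than of `task1` or `task2`.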
